Facilities

Our Department is home to outstanding facilities for use in our internationally-renowned research and our courses at all levels of study.

As part of our Department's research into e-Science, robotics and interaction analysis, we have dedicated facilities for each of these research areas.

Our base in the Kilburn Building is home to modern lecture theatres, dedicated common rooms for undergraduates, postgraduates and staff, as well as laboratories for collaborative study.

In addition to this, the University benefits from leading computing facilities, featuring:

- over 300 computers exclusively for the use of our students;

- collaborative working labs complete with specialist computing and audio visual equipment to support group working;

- access to a range of integrated development environments;

- specialist electronic system design and computer engineering tools.

We are working hard to ensure we are able to provide safe access to these facilities in line with the latest government advice. The safety and wellbeing of our staff and students is our highest priority and we will be providing health and safety briefings as part of your induction.

Explore some of our dedicated facilities below.

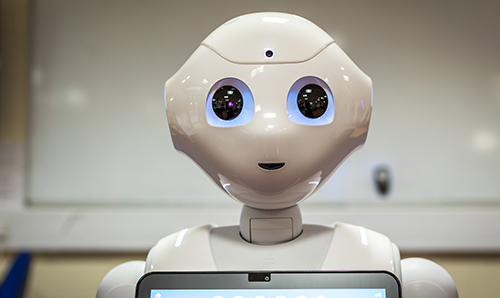

Cognitive Robotics Laboratory

The integration of machine learning and AI methods for training robots' cognitive and social skills.

Interaction Analysis and Modelling Laboratory

Work in the IAM Lab allows us to examine how users interact with the web, and how the web enables users to interact with it.

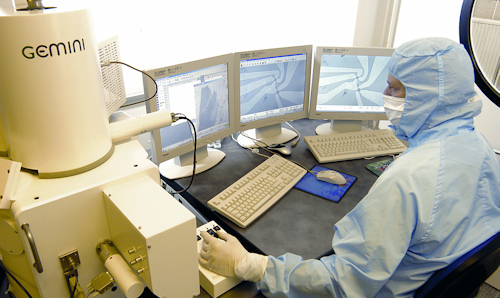

Mesoscience and nanotechnology workshop

Our nanoworkshop has extensive facilities that allow us to fabricate, visualise and characterise structures and devices containing individual elements.

Autonomy and Verification Robot Laboratory

The lab allows members of the group to investigate autonomy and verification of autonomous systems in practical settings alongside key issues such as ethics, responsibility and trustworthiness.