Systems and software security

Our researchers

- Lucas Cordeiro (Area Lead)

- Richard Banach

- Alex Creswell

- Daniel Dresner

- Bernardo Magri

- Edoardo Manino

- Mustafa Mustafa

- Gaven Smith

- Youcheng Sun

- Ning Zhang

We develop state-of-the-art algorithms, methods and protocols to address security and privacy in networked and distributed system environments, and tools to build verifiable, trustworthy software and AI/ML systems.

Our expertise covers many topics, including digital trust, security, and privacy.

The Systems and Software Security (S3) Group members have research interests, which include:

- Develop algorithms, methods, protocols, and architecture to protect data, processes, and information-based resources to ensure trustworthiness and privacy.

- Develop the mathematics of software, AI/ML, and system computational behaviour by studying and developing system design, verification, and validation methods.

- Develop privacy protection mechanisms, deploying techniques such as secure computation (homomorphic encryption and multiparty computation), anonymisation, differential privacy, obfuscation, anonymous credentials, and zero-knowledge proofs.

- Theoretical and practical aspects of cryptography and its applications, including subversion-resilient cryptography, Blockchain foundations, key-exchange protocols, advanced public-key primitives, cryptography from noisy sources, and secure multi-party computation.

Explore our profiles, research outputs, projects, activities, datasets, and awards on our Systems and Software Security webpage.

Trustworthy software systems

The S3 Group has a particular strength in automating reasoning to build verifiable, trustworthy software systems formally. Members of the S3 research group have a world-leading reputation in malware and attack technologies, adversarial behaviour, security operations and incident management, cryptography, software, hardware, AI/ML and network security, privacy-enhancing technologies, software quality assurance, and how these are applied to the governance, risk management, and compliance in cyber-security. Other areas of interest include distributed and cyber-physical systems security.

Award-winning software

S3 develops award-winning software verification and testing tools, including ESBMC, FuSeBMC and JBMC. Over the last ten years, these tools have consistently won international software verification and testing competitions, focusing on security. The group has significantly contributed to software and systems verification and security: theory and implementation techniques.

Collaboration

The S3 Group collaborates closely with other research groups in the CS department, including the Advanced Processor Technology (APT), Autonomy and Verification (AV), Formal Methods (FM), Information Management (IMG), and Machine Learning and Robotics (MLR) groups.

The S3 group is also integral to the Trusted Digital Systems (TDS) cluster of the university-wide cross-disciplinary Centre for Digital Trust and Society (CDTS). The group provides the software security capabilities of the CDTS and The University of Manchester, in general, which has been jointly recognised as an Academic Centres of Excellence in Cyber Security Research (ACE-CSR) by the NCSC and the Engineering and Physical Sciences Research Council (EPSRC). Lastly, S3 has strong links to the industry, including recent collaborations with ARM, AWS, Ethererum, Intel, and Motorola.

Teaching

Regarding teaching, the S3 group delivers Cyber Security, Cryptography, Software Security, and Systems Governance, some of which contribute to our MSc in Advanced Computer Science - Computer Security, a fully NCSC-certified degree.

Research focus:

Our research is focused on the following specialist areas:

-

Cryptography

We investigate the theory and practice of ensuring secure communication of computer-based systems by constructing and analysing protocols to prevent adversaries from reading private messages. We focus on formally verifying security properties of both abstract models and code implementing cryptographic protocols by combining abstract interpretation, symbolic execution and fuzzing.

We apply advanced cryptographic techniques to design and evaluate secure and privacy-enhancing solutions for various application domains, including smart grid, e-health, e-banking, smart city and sharing economy. -

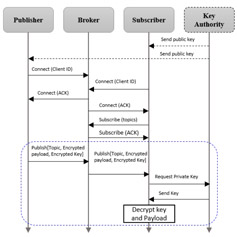

Networked and distributed system security

We design intelligent security solutions for smart systems, including cryptographic methods, security protocols and system architectures to facilitate authorised access to resources while protecting networks, devices, services and data from security attacks in networked and distributed systems.

Our work settings include wireless sensor networks, mobile ad hoc networks, ubiquitous computing, internet of things, fog and cloud computing, focusing on threat identification, risk assessment, and countermeasure designs.

-

Data and identity privacy

Data and identity privacy protection are of paramount importance. We have a broad interest and expertise in designing, analysing and implementing privacy-enhancing technologies.

We do theoretical research on the nature of data and identity privacy protection and applied work related to a wide range of application domains such as smart grid, smart city, sharing economy, connected vehicles, internet of things, and others. -

Systems and Software Verification

We investigate the theory and practice of automatically analysing software systems' behaviour concerning a given security or safety property. In particular, we develop methods, algorithms and tools to demonstrate correctness with solid mathematical guarantees of software systems using model checking, abstract interpretation, theorem proving, and runtime verification.

We focus on security applications for smart contracts, punishment (not reward) blockchain architecture, cyber-controls, robotics, industrial control systems and the internet of things.

-

Systemic view of governance in cyber resilience

We learn from the limited integration of historic sociotechnical approaches, which tend to favour user awareness and training, to the tacit, anthropotechnical balance of whole systems based on situational awareness and forensic readiness that will enable them to act on feedback to cope with attack and other failures.

-

AI safety and security

We develop approaches for assuring the safety of AI and machine learning systems across different levels of criticality. For highly critical systems, we research efficient algorithms for the bit-precise verification of neural network code, detection of backdoors and data poisoning attacks, protection against inference attacks, and ensuring the robustness of neural models via safe-by-design architectures.

For less critical systems, we research improved auditing methods for generative language models and the safeguarding of intellectual property. We are also interested in explainable AI methods, which are especially valuable in facilitating communication with experts from interdisciplinary fields.